library(harp)

library(here)

library(scico)

library(dplyr)

library(forcats)

fcst_tmplt <- file.path(

"{fcst_model}",

"{YYYY}",

"{MM}",

"{DD}",

"{fcst_model}_lagged_6_h_subset_2_5km_{YYYY}{MM}{DD}T{HH}Z.nc"

)

fcst <- read_forecast(

2024021912,

"meps",

"pcp",

lead_time = seq(0, 12),

file_path = here("data", "netcdf"),

file_template = fcst_tmplt,

return_data = TRUE

)Spatial Verification

Ensemble Fractions Skill Score

The ensemble fractions skill score is computed by ens_fss(). The function is experimental, meaning that the results have not been verified, and there are no specialised plotting functions for the score. Unlike other functions in harpSpatial it is designed to have the same API as the point verification functions. This means that the workflow follows the

- Read Forecast

- Read Observations

- Join

- Verify

- Plot

pattern. For this example we will use some precipitation from the MEPS model. We have data for 7 of the ensemble members for lead times 0 - 12 for a domain over the north of Norway. We can read it in with read_forecast().

Read Forecast

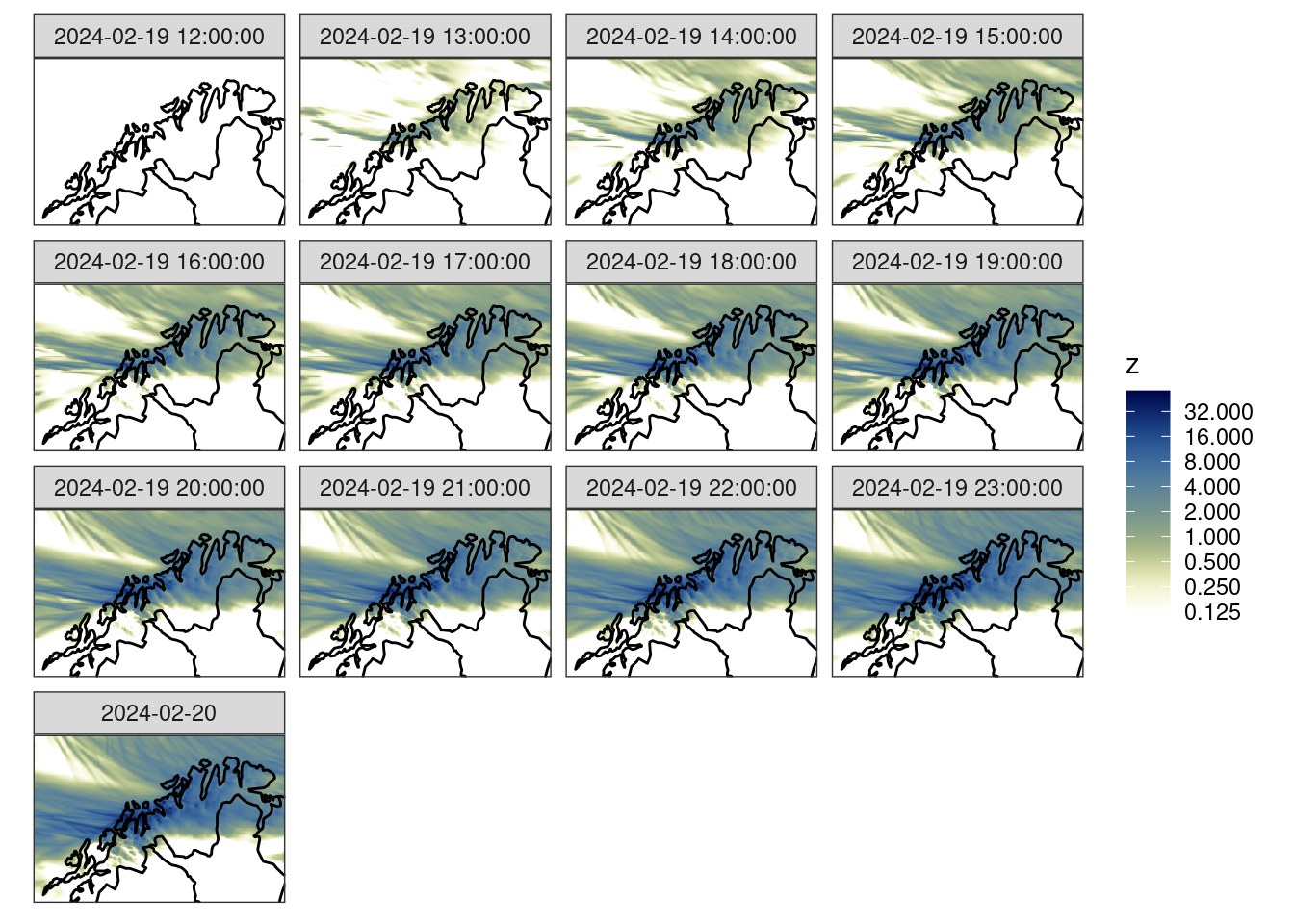

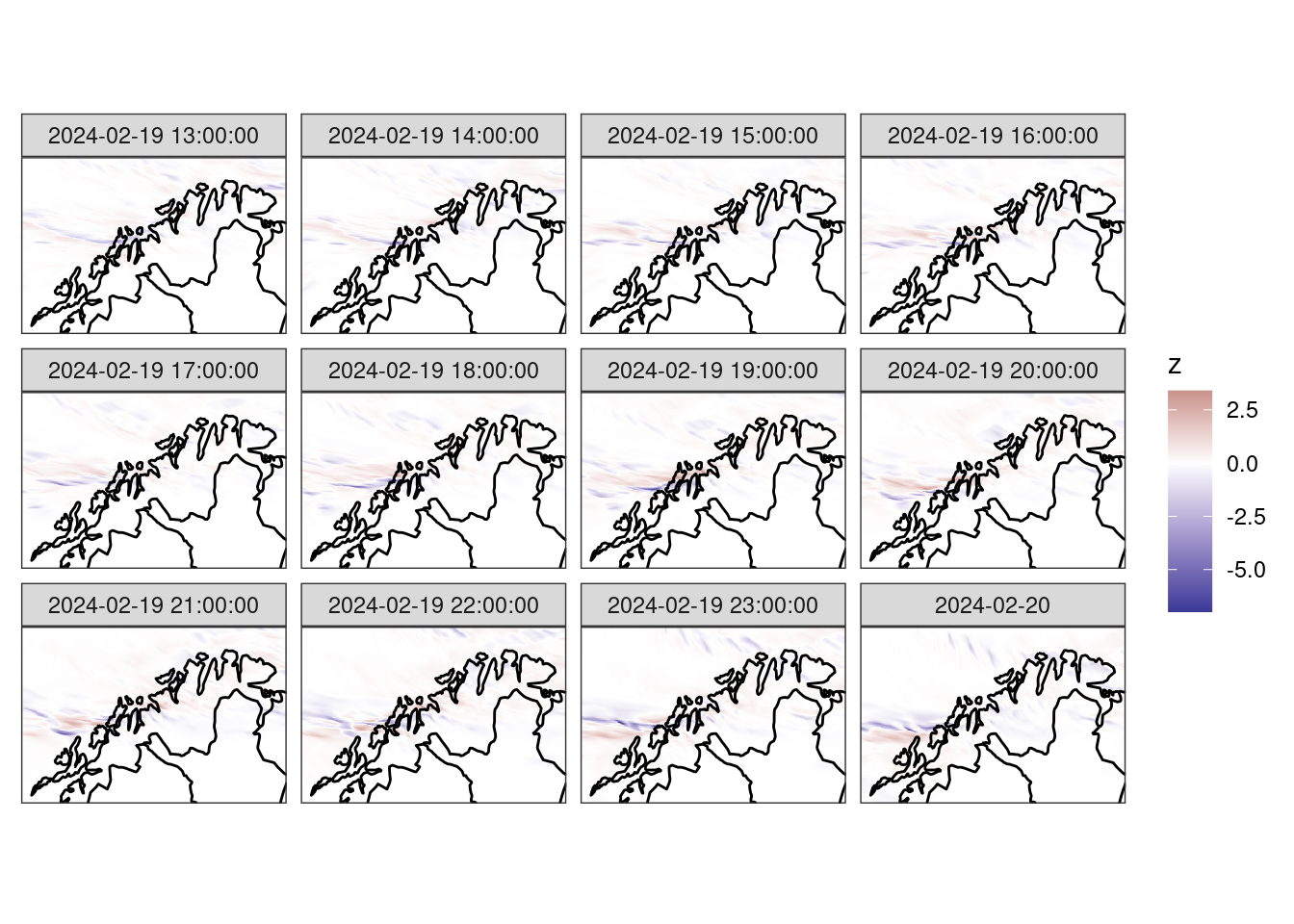

We can plot the data with ggplot() together with geom_georaster(). There will be more about these in the next tutorial…

fc_map <- get_map(get_domain(fcst$meps_mbr000), polygon = FALSE)

ggplot() +

geom_georaster(aes(geofield = meps_mbr000), fcst) +

geom_path(aes(x, y), fc_map) +

scale_fill_gradientn(

colours = scico(256, palette = "davos", direction = -1),

trans = "log",

limits = c(0.125, NA),

breaks = seq_double(0.125, 12),

na.value = "transparent"

) +

facet_wrap(~valid_dttm) +

coord_equal(expand = FALSE) +

theme_harp_map()Warning in scale_fill_gradientn(colours = scico(256, palette = "davos", :

log-2.718282 transformation introduced infinite values.

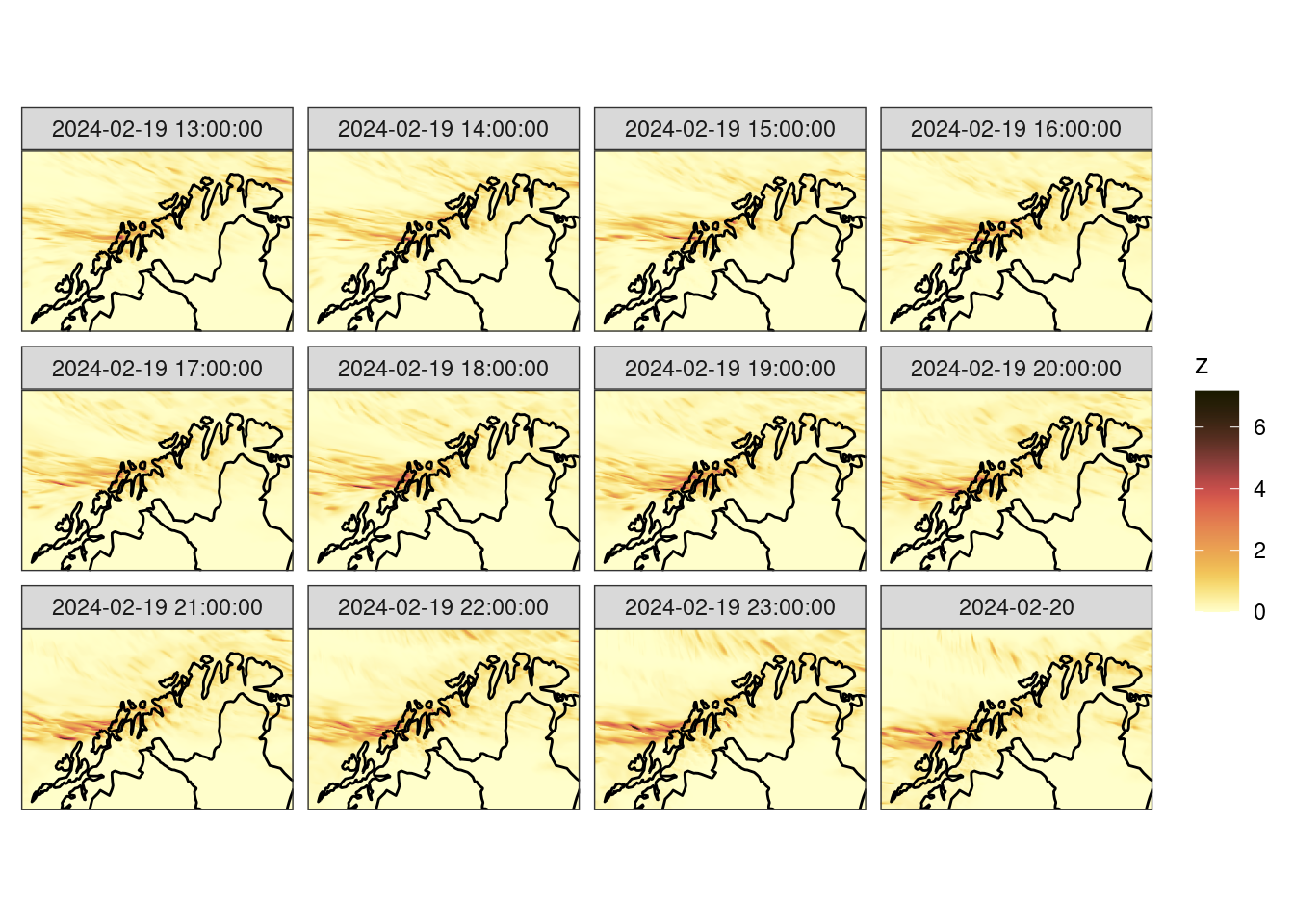

Since the data are from a forecast model, they are accumulated from time zero. For the verification we are going to want 1-hour accumulations. We can calculate these with decum().

fcst <- decum(fcst, 1) |>

filter(lead_time > 0)

ggplot() +

geom_georaster(aes(geofield = meps_mbr000), fcst) +

geom_path(aes(x, y), fc_map) +

scale_fill_gradientn(

colours = scico(256, palette = "davos", direction = -1),

trans = "log",

limits = c(0.125, NA),

breaks = seq_double(0.125, 12),

na.value = "transparent"

) +

facet_wrap(~valid_dttm) +

coord_equal(expand = FALSE) +

theme_harp_map()Warning in transformation$transform(x): NaNs producedWarning in scale_fill_gradientn(colours = scico(256, palette = "davos", :

log-2.718282 transformation introduced infinite values.

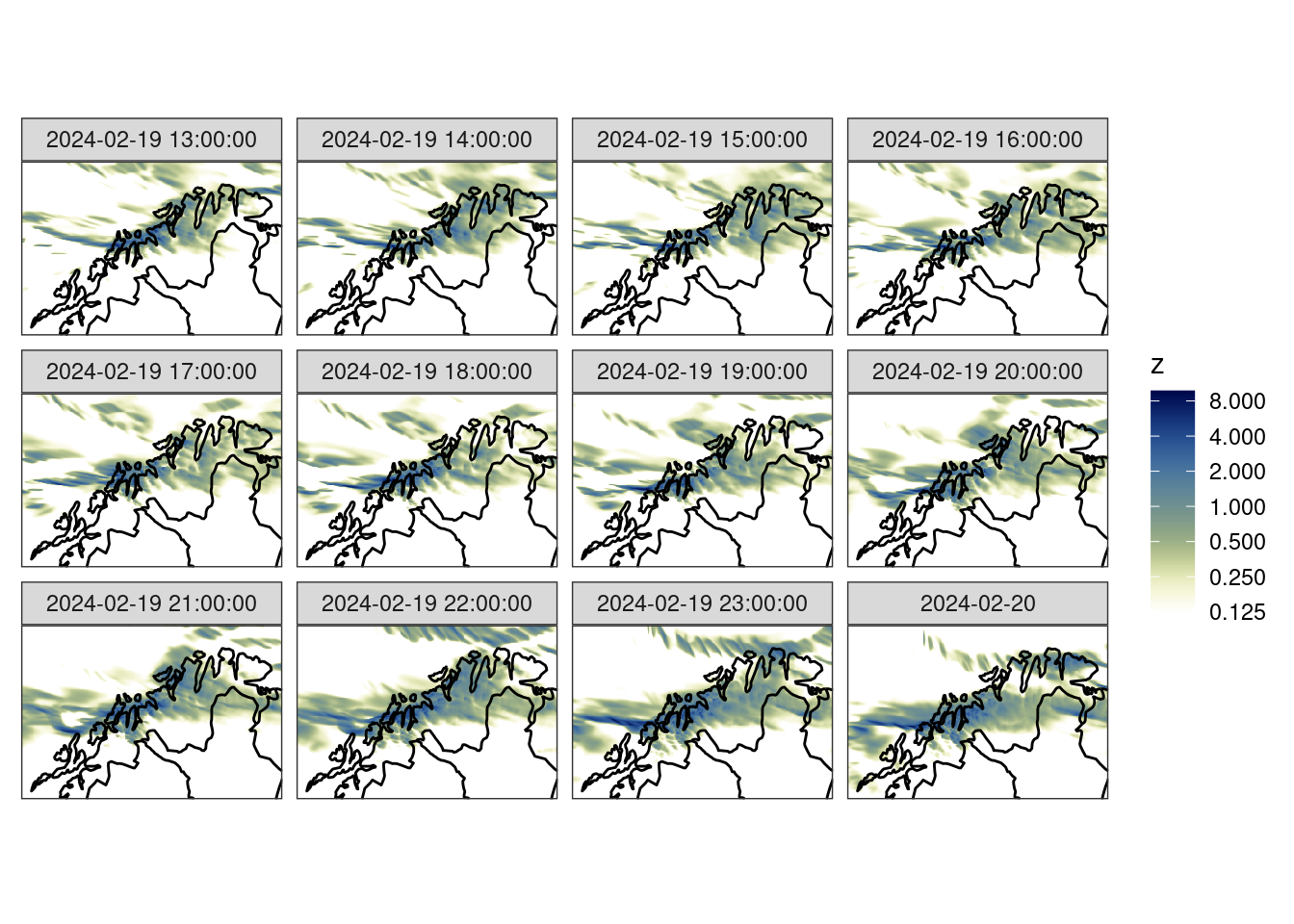

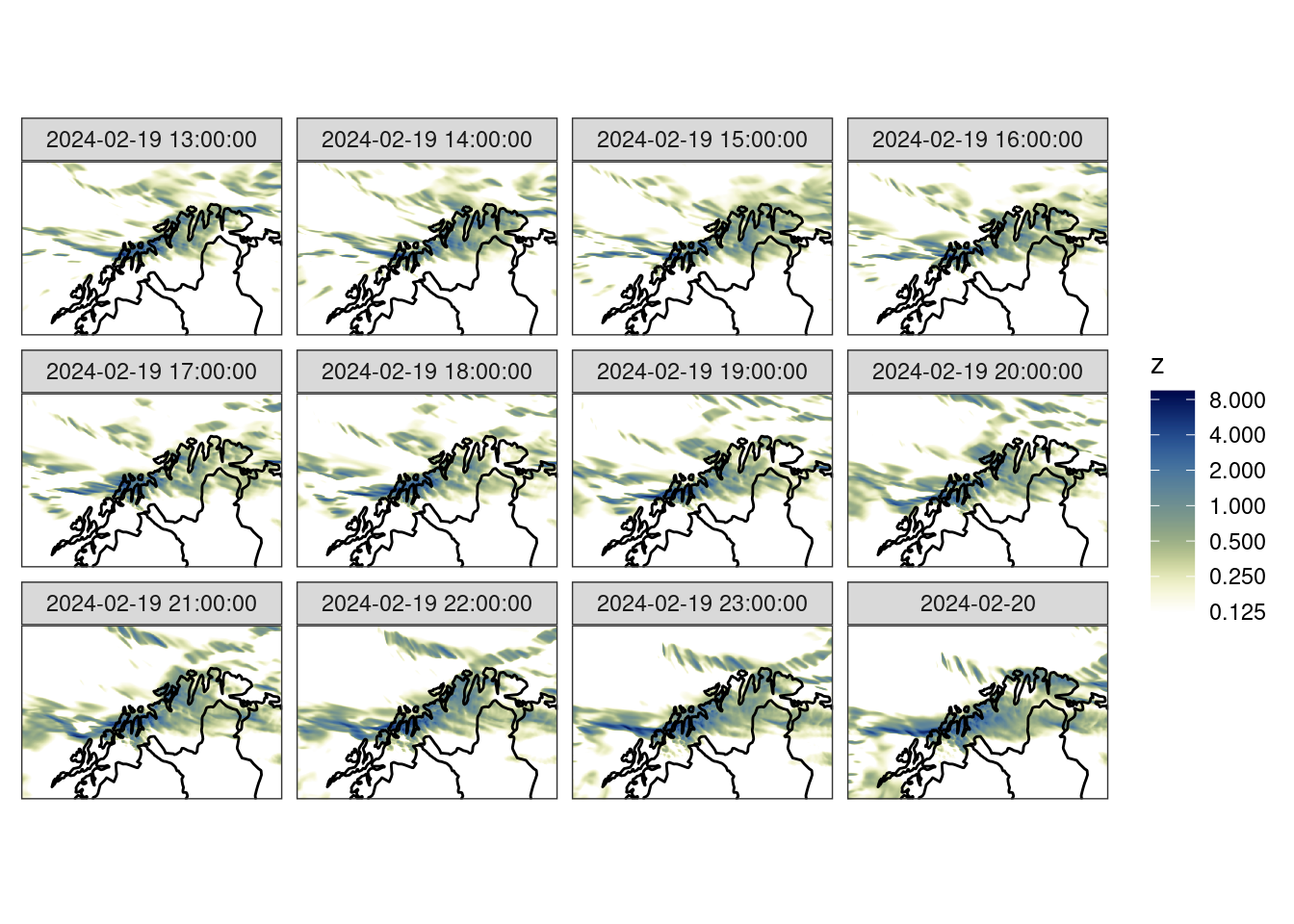

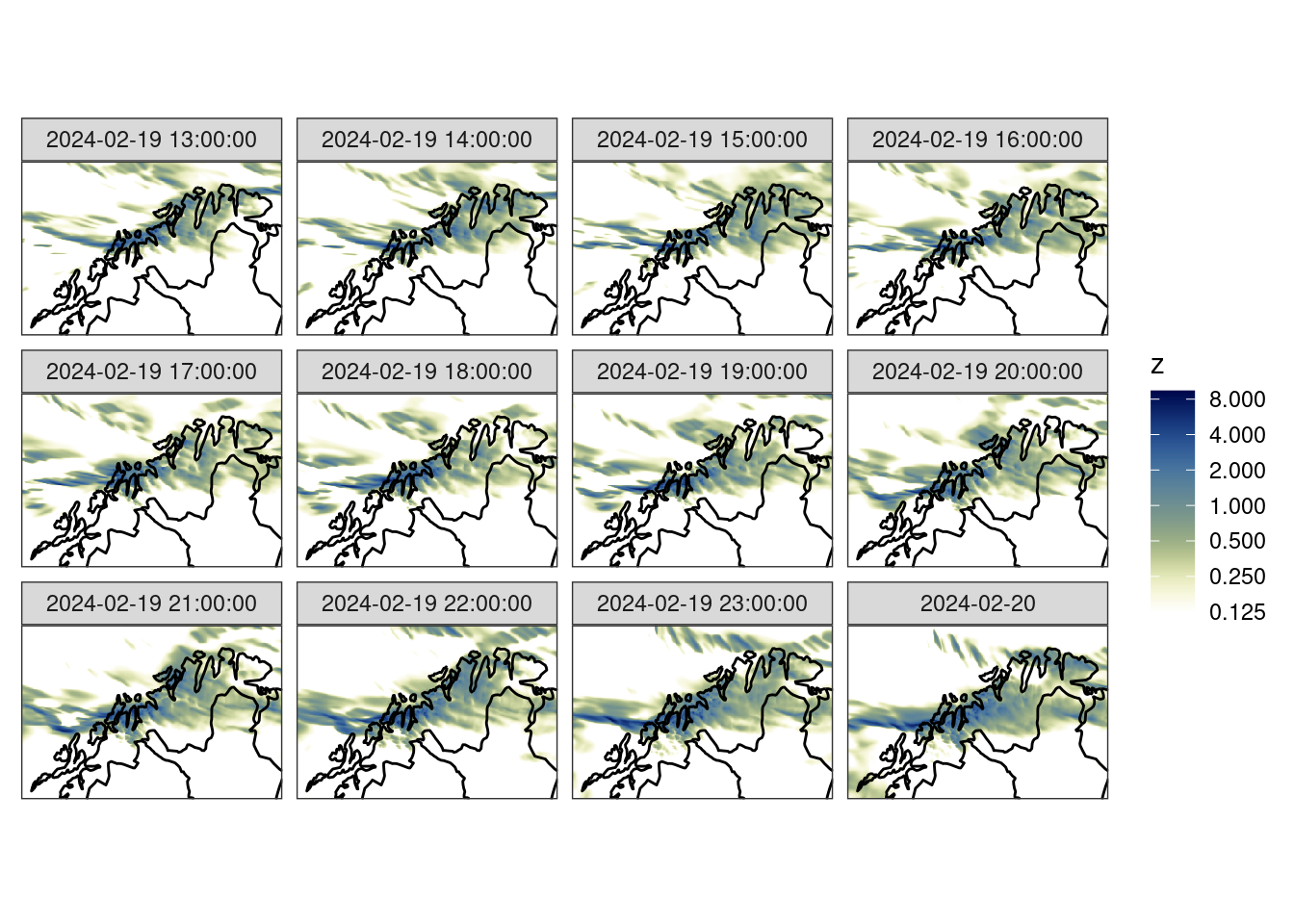

Read Analysis

We are going to verify these against the MET Nordic analysis that has a 1 km resolution. For the purposes of this tutorial we have cut the domain to a similar area over northern Norway. We can read the data with read_analysis(), which works much in the same way as read_forecast(), except that the dttm argument is the valid date-time of the data.

anl_tmplt <- file.path(

"{fcst_model}",

"{fcst_model}_1_0km_nordic_{YYYY}{MM}{DD}T{HH}Z.nc"

)

anl <- read_analysis(

dttm = unique_valid_dttm(fcst),

analysis_model = "met_analysis",

parameter = "precipitation_amount",

file_path = here("data", "netcdf"),

file_template = anl_tmplt,

file_format_opts = netcdf_opts(proj4_var = "projection_lcc")

)

anl_map <- get_map(get_domain(anl$anl), polygon = FALSE)ggplot() +

geom_georaster(aes(geofield = anl), anl) +

geom_path(aes(x, y), anl_map) +

scale_fill_gradientn(

colours = scico(256, palette = "davos", direction = -1),

trans = "log",

limits = c(0.125, NA),

breaks = seq_double(0.125, 12),

na.value = "transparent"

) +

facet_wrap(~valid_dttm) +

coord_equal(expand = FALSE) +

theme_harp_map()

However, we can’t verify the forecasts against the the analyses since they are on different domains. We have two options here. Firstly we could use geo_regrid() to regrid the analyses to the forecast grid. Notice how the dimensions in the anl column change to match those of fcst.

# before

anl::gridded analysis:: # A tibble: 12 × 7

anl_model parameter valid_dttm level_type level units anl

* <chr> <chr> <dttm> <chr> <dbl> <chr> <geolist>

1 met_analysis precipit… 2024-02-19 13:00:00 unknown NA kg/m… [901 × 601]

2 met_analysis precipit… 2024-02-19 14:00:00 unknown NA kg/m… [901 × 601]

3 met_analysis precipit… 2024-02-19 15:00:00 unknown NA kg/m… [901 × 601]

4 met_analysis precipit… 2024-02-19 16:00:00 unknown NA kg/m… [901 × 601]

5 met_analysis precipit… 2024-02-19 17:00:00 unknown NA kg/m… [901 × 601]

6 met_analysis precipit… 2024-02-19 18:00:00 unknown NA kg/m… [901 × 601]

7 met_analysis precipit… 2024-02-19 19:00:00 unknown NA kg/m… [901 × 601]

8 met_analysis precipit… 2024-02-19 20:00:00 unknown NA kg/m… [901 × 601]

9 met_analysis precipit… 2024-02-19 21:00:00 unknown NA kg/m… [901 × 601]

10 met_analysis precipit… 2024-02-19 22:00:00 unknown NA kg/m… [901 × 601]

11 met_analysis precipit… 2024-02-19 23:00:00 unknown NA kg/m… [901 × 601]

12 met_analysis precipit… 2024-02-20 00:00:00 unknown NA kg/m… [901 × 601]#after

geo_regrid(anl, fcst$meps_mbr000)::gridded analysis:: # A tibble: 12 × 7

anl_model parameter valid_dttm level_type level units anl

<chr> <chr> <dttm> <chr> <dbl> <chr> <geolist>

1 met_analysis precipit… 2024-02-19 13:00:00 unknown NA kg/m… [300 × 200]

2 met_analysis precipit… 2024-02-19 14:00:00 unknown NA kg/m… [300 × 200]

3 met_analysis precipit… 2024-02-19 15:00:00 unknown NA kg/m… [300 × 200]

4 met_analysis precipit… 2024-02-19 16:00:00 unknown NA kg/m… [300 × 200]

5 met_analysis precipit… 2024-02-19 17:00:00 unknown NA kg/m… [300 × 200]

6 met_analysis precipit… 2024-02-19 18:00:00 unknown NA kg/m… [300 × 200]

7 met_analysis precipit… 2024-02-19 19:00:00 unknown NA kg/m… [300 × 200]

8 met_analysis precipit… 2024-02-19 20:00:00 unknown NA kg/m… [300 × 200]

9 met_analysis precipit… 2024-02-19 21:00:00 unknown NA kg/m… [300 × 200]

10 met_analysis precipit… 2024-02-19 22:00:00 unknown NA kg/m… [300 × 200]

11 met_analysis precipit… 2024-02-19 23:00:00 unknown NA kg/m… [300 × 200]

12 met_analysis precipit… 2024-02-20 00:00:00 unknown NA kg/m… [300 × 200]The alternative is to do the regridding at read time. This would generally be the more sensible approach since it saves on reading unnecessary data into memory.

anl <- read_analysis(

dttm = unique_valid_dttm(fcst),

analysis_model = "met_analysis",

parameter = "precipitation_amount",

file_path = here("data", "netcdf"),

file_template = anl_tmplt,

file_format_opts = netcdf_opts(proj4_var = "projection_lcc"),

transformation = "regrid",

transformation_opts = regrid_opts(get_domain(fcst$meps_mbr000))

)ggplot() +

geom_georaster(aes(geofield = anl), anl) +

geom_path(aes(x, y), fc_map) +

scale_fill_gradientn(

colours = scico(256, palette = "davos", direction = -1),

trans = "log",

limits = c(0.125, NA),

breaks = seq_double(0.125, 12),

na.value = "transparent"

) +

facet_wrap(~valid_dttm) +

coord_equal(expand = FALSE) +

theme_harp_map()

Join and Basic Scores

Now, just like in the point verification workflow, we can join the forecast and analysis. Here we have to take care that columns that aren’t common but don’t matter to the join are excluded otherwise the join will return an empty data frame. We can exclude the offending columns using dplyr’s select function.

fcst <- join_to_fcst(

fcst,

select(anl, -parameter, -level_type, -level)

)At this point we could compute some basic scores, like bias or RMSE (there are no harp functions to do this yet, but it’s quite straightforward using dplyr’s mutate() in combination with ens_stats()).

bias <- mutate(

ens_stats(fcst, sd = FALSE),

ens_bias = ens_mean - anl

) |>

select(-ens_mean, -anl)

rmse <- mutate(

fcst,

across(contains("_mbr"), ~(.x - anl) ^ 2)

) |>

ens_stats(sd = FALSE) |>

mutate(

ens_rmse = sqrt(ens_mean)

) |>

select(-ens_mean, -anl)ggplot() +

geom_georaster(aes(geofield = ens_bias), bias) +

geom_path(aes(x, y), fc_map) +

scale_fill_gradient2(

low = scales::muted("blue"),

high = scales::muted("red"),

) +

facet_wrap(~valid_dttm) +

coord_equal(expand = FALSE) +

theme_harp_map()

ggplot() +

geom_georaster(aes(geofield = ens_rmse), rmse) +

geom_path(aes(x, y), fc_map) +

scale_fill_gradientn(

colours = scico(256, palette = "lajolla", direction = -1)

) +

facet_wrap(~valid_dttm) +

coord_equal(expand = FALSE) +

theme_harp_map()

Computing the Ensemble Fractions Skill Score

We can now compute the ensemble fractions skill score. There are three different scores we can compute these are summarised below:

| Function | Score |

|---|---|

ens_fss() |

mean FSS of the ensemble as a whole |

ens_efss() |

error FSS - the mean FSS of the ensemble mean |

ens_dfss() |

dispersion FSS - the mean FSS of each ensemble member against all other ensemble members |

The latter two can be thought of as the spatial equivalents of the skill and spread of the ensemble respectively.

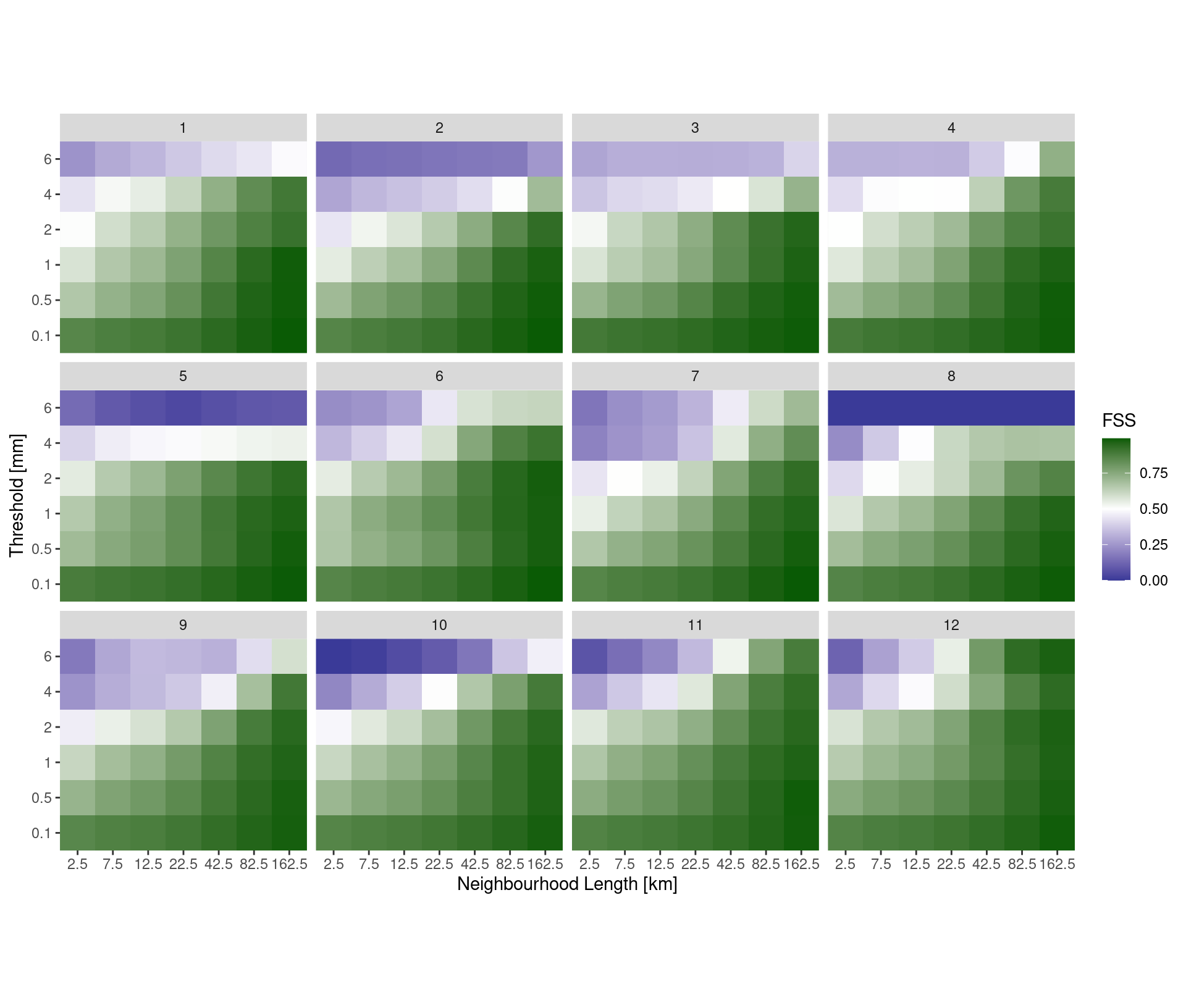

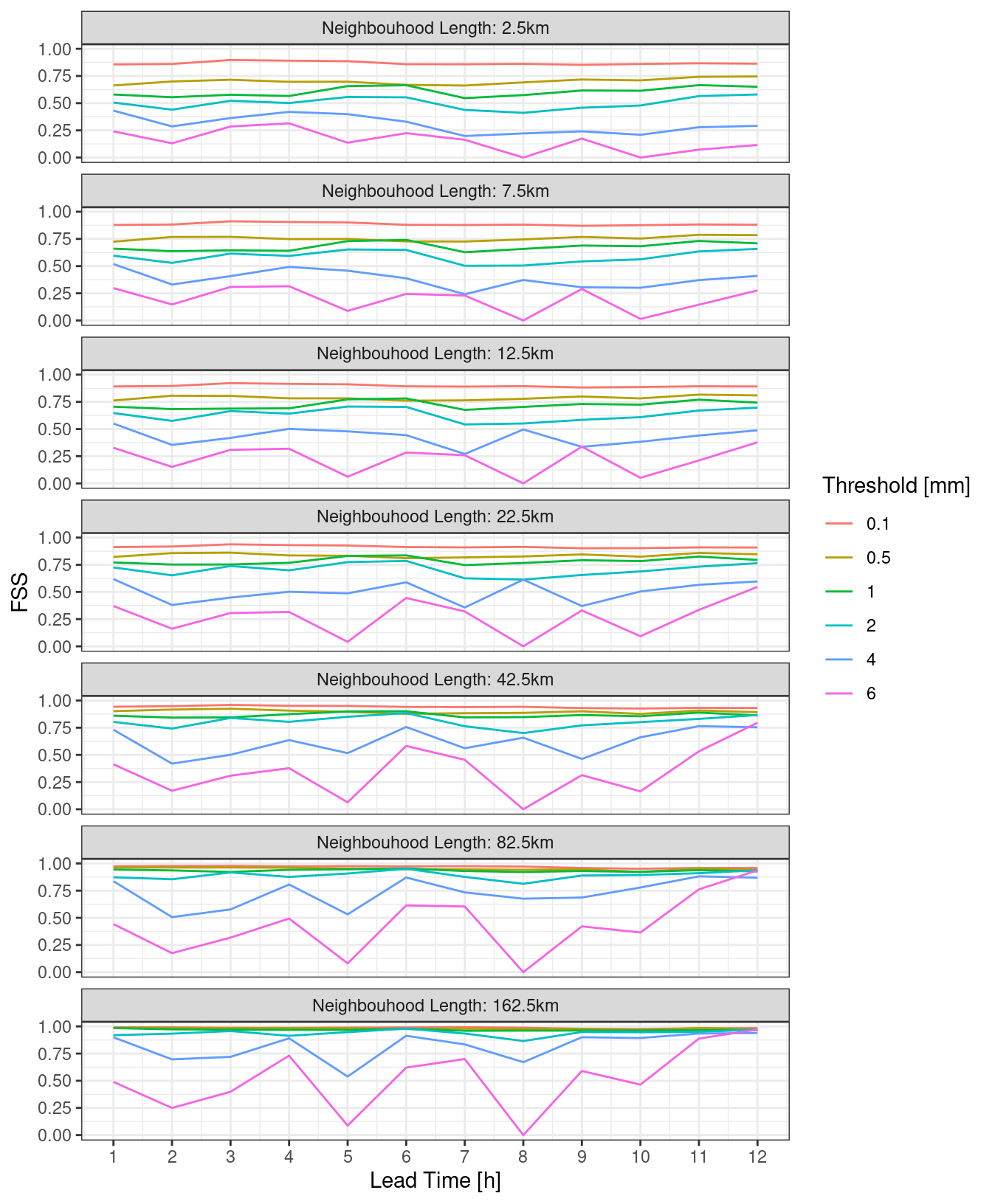

When calculating the FSS we need to provide two pieces of information - the thresholds for which to compute the FSS (by default it is done for forecast >= threshold and analysis >= threshold, but the comparator argument can be used to choose different comparisons with the threshold), and the radii of the neighbourhoods to compute the FSS. The radius of a neighbourhood is the number of grid squares in each direction from a central grid square, thus a radius of 1 results in a 3 x 3 neighbourhood.

We will calculate each of the scores with thresholds of 0.1, 0.5, 1, 2, 4 and 6 mm for neighbourhood radii of 0, 1, 2, 4, 8 and 16 and grid squares.

thresh <- c(0.1, 0.5, 1, 2, 4, 6)

rad <- c(0, seq_double(1, 6))

pcp_fss <- ens_fss(fcst, anl, thresh ,rad)

pcp_efss <- ens_efss(fcst, anl, thresh ,rad)

pcp_dfss <- ens_dfss(fcst, thresh ,rad)A typical way to plot the fractions skill score is as a raster, with thresholds on one axis and neighbourhood lengths on the other, with each square coloured according to the fss.

ggplot(

pcp_fss,

aes(

fct_inseq(as.character(nbhd_length / 1000)),

fct_inseq(as.character(threshold)),

fill = fss

)

) +

geom_raster() +

facet_wrap(~lead_time) +

scale_fill_gradient2(

midpoint = 0.5,

low = scales::muted("blue"),

high = scales::muted("green")

) +

labs(

x = "Neighbourhood Length [km]",

y = "Threshold [mm]",

fill = "FSS"

) +

coord_equal(expand = FALSE)

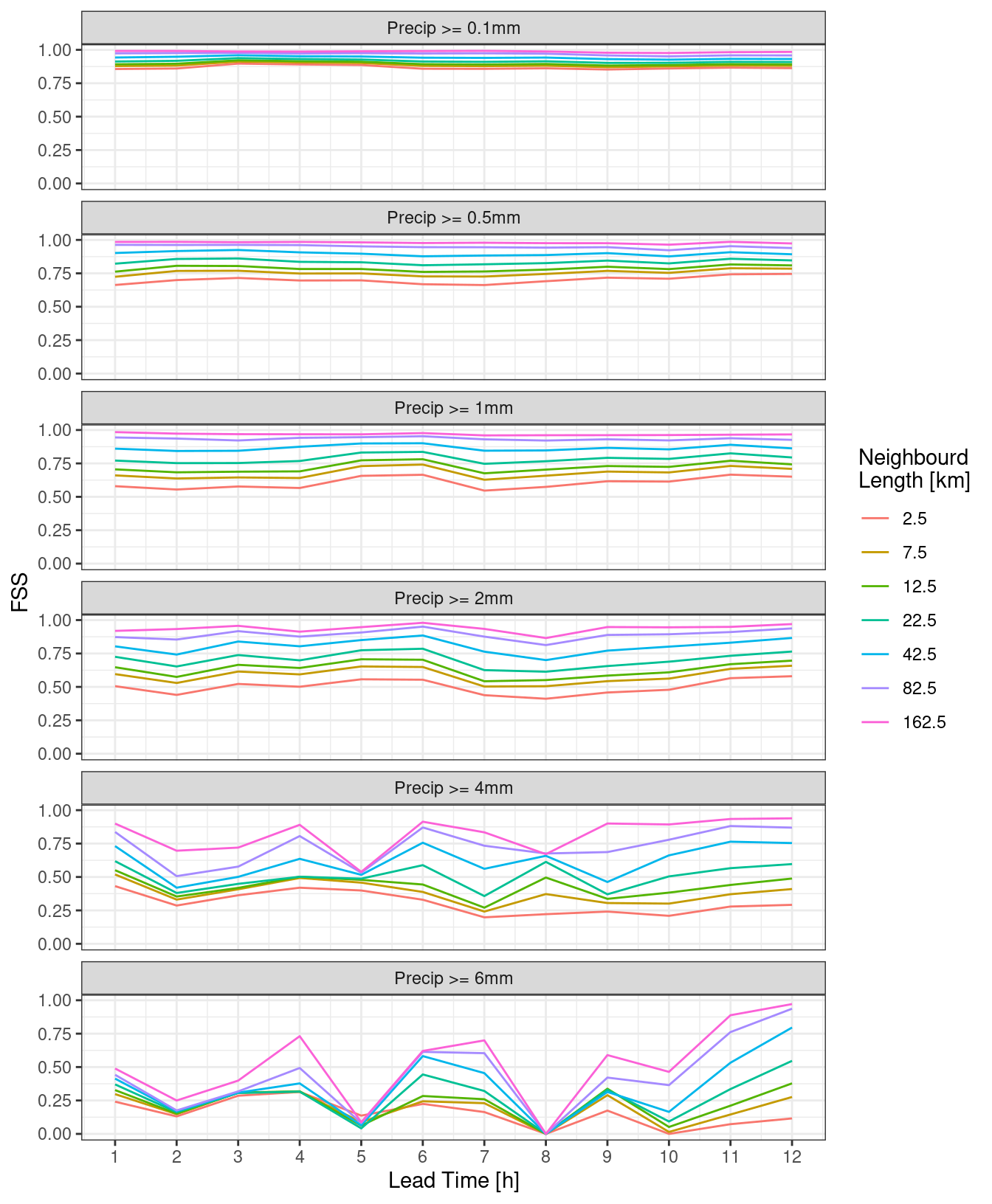

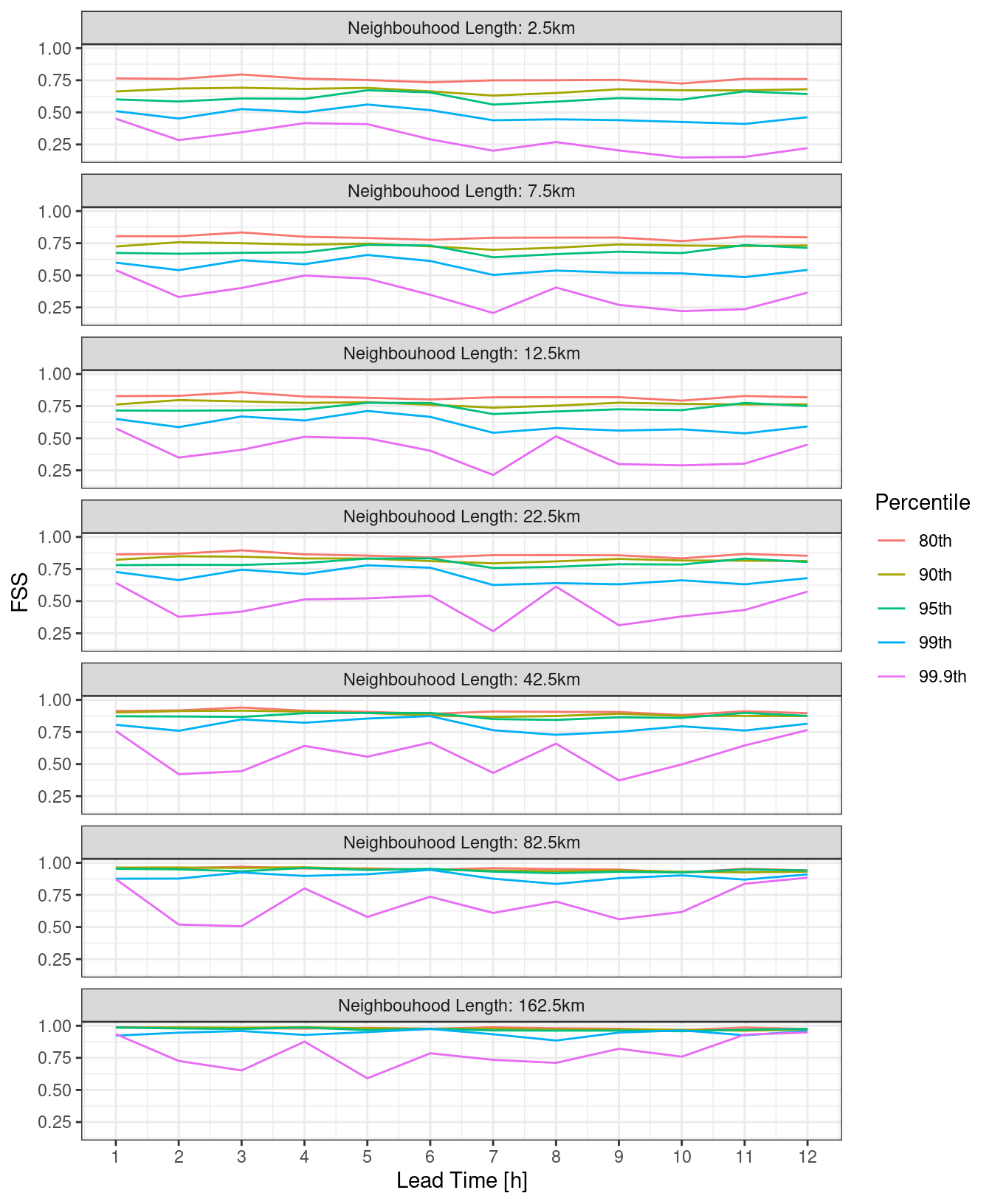

However, it may be clearer to make a line plot facted by either neighbourhood length or threshold.

ggplot(

pcp_fss,

aes(

lead_time,

fss,

colour = fct_inseq(as.character(nbhd_length / 1000))

)

) +

geom_line() +

facet_wrap(

~fct_reorder(paste0("Precip >= ", threshold, "mm"), threshold),

ncol = 1

) +

labs(

x = "Lead Time [h]",

y = "FSS",

colour = "Neighbourd\nLength [km]"

) +

scale_x_continuous(breaks = seq(0, 12)) +

theme_bw()

ggplot(

pcp_fss,

aes(

lead_time,

fss,

colour = fct_inseq(as.character(threshold))

)

) +

geom_line() +

facet_wrap(

~fct_reorder(

paste0("Neighbouhood Length: ", nbhd_length / 1000, "km"),

nbhd_length

),

ncol = 1

) +

labs(

x = "Lead Time [h]",

y = "FSS",

colour = "Threshold [mm]"

) +

scale_x_continuous(breaks = seq(0, 12)) +

theme_bw()

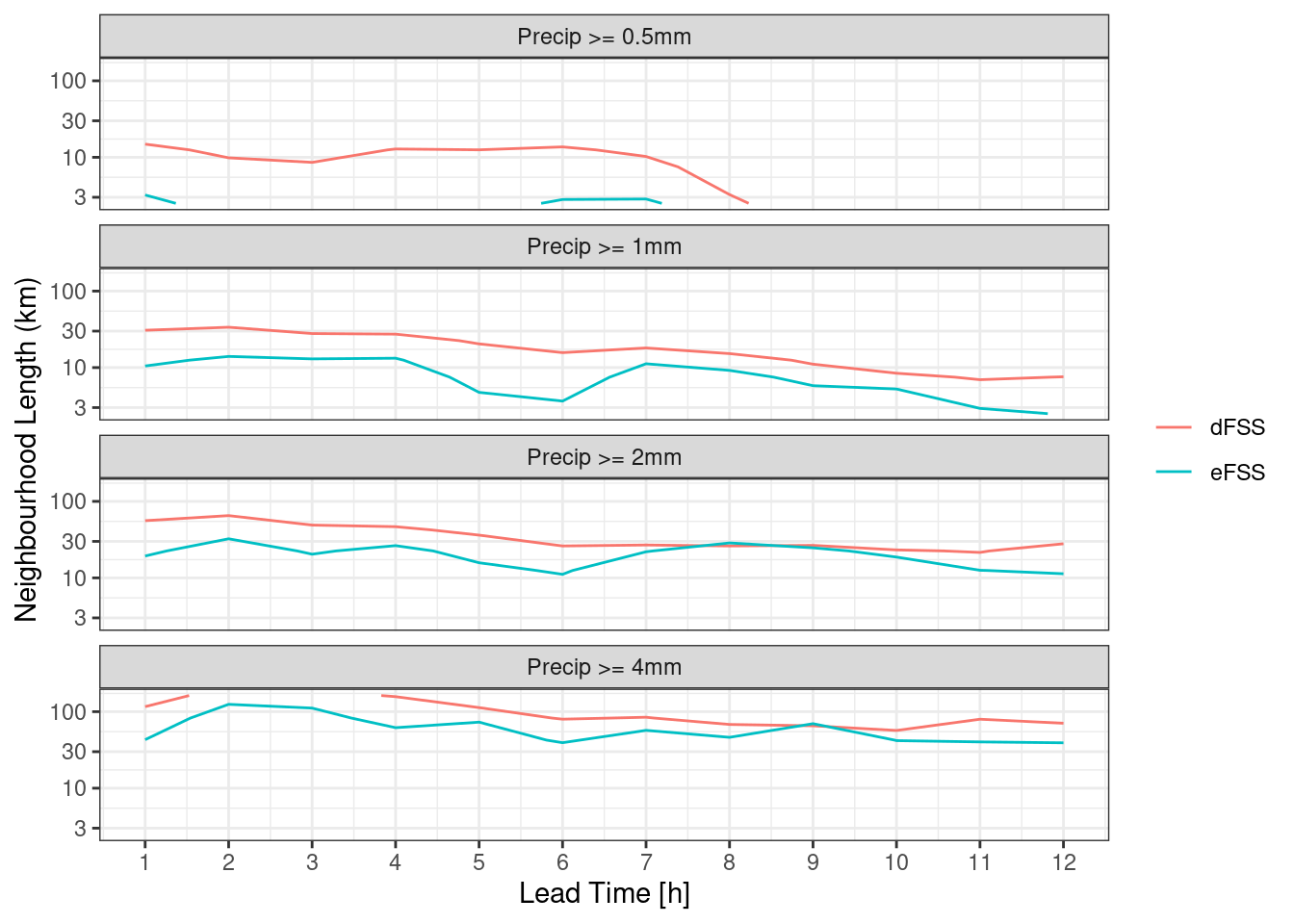

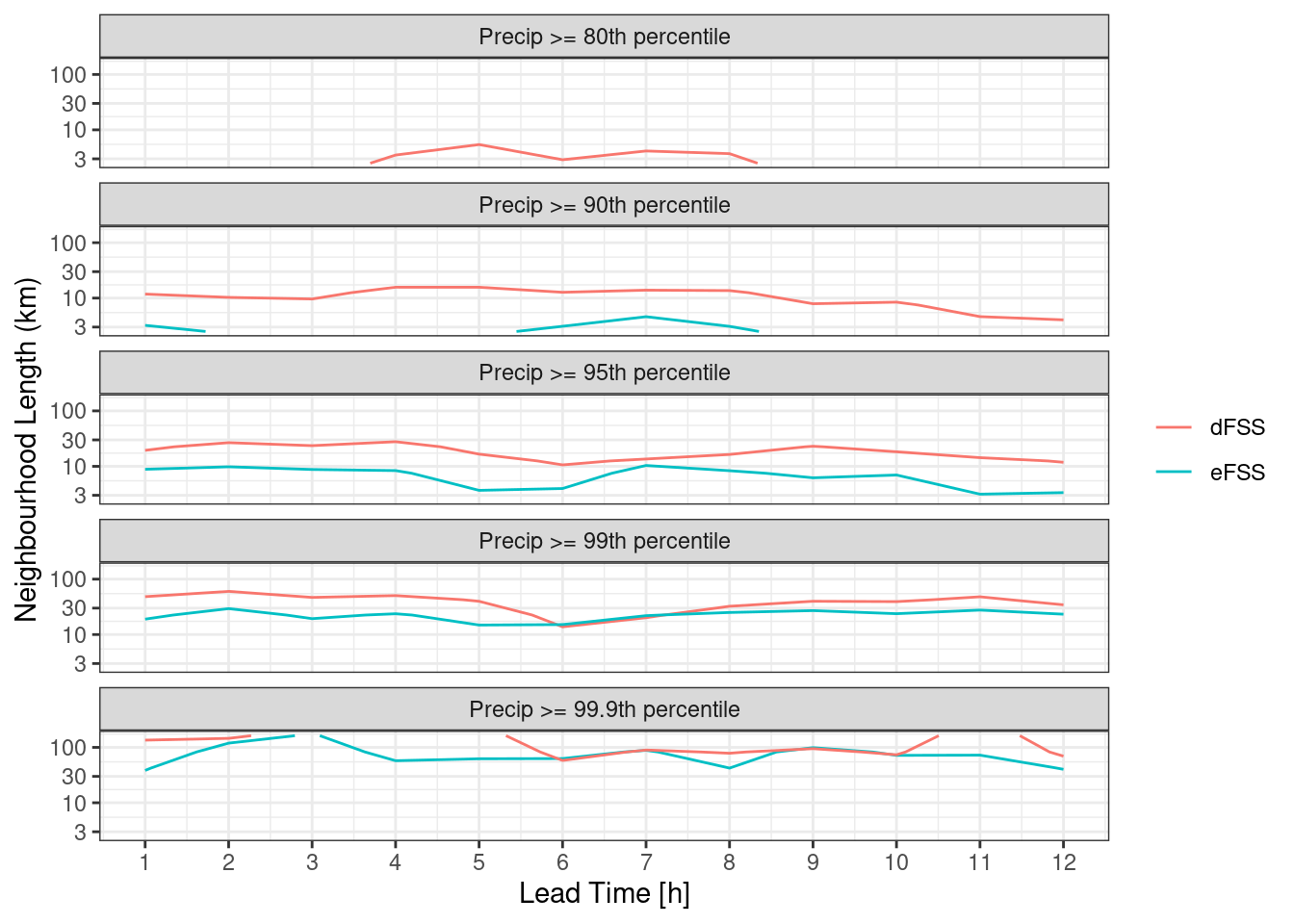

For the eFSS and dFSS, the important piece of information is for what neighbourhood length the FSS becomes skilful. A rule of thumb is that a “skilful” FSS is a value >= 0.5. We can plot the FSS = 0.5 contour for both eFSS and dFSS. In order to do this, we need to bind the two data frames and make sure each has a column saying what it is.

pcp_fss_ed <- bind_rows(

mutate(pcp_dfss, score = "dFSS"),

mutate(pcp_efss, score = "eFSS")

)

ggplot(

filter(pcp_fss_ed, between(threshold, 0.5, 4)),

aes(lead_time, nbhd_length / 1000, z = fss, colour = score)

) +

geom_contour(breaks = 0.5) +

facet_wrap(

~fct_reorder(paste0("Precip >= ", threshold, "mm"), threshold),

ncol = 1

) +

scale_y_log10() +

labs(

x = "Lead Time [h]",

y = "Neighbourhood Length (km)",

colour = NULL

) +

scale_x_continuous(breaks = seq(0, 12)) +

theme_bw()

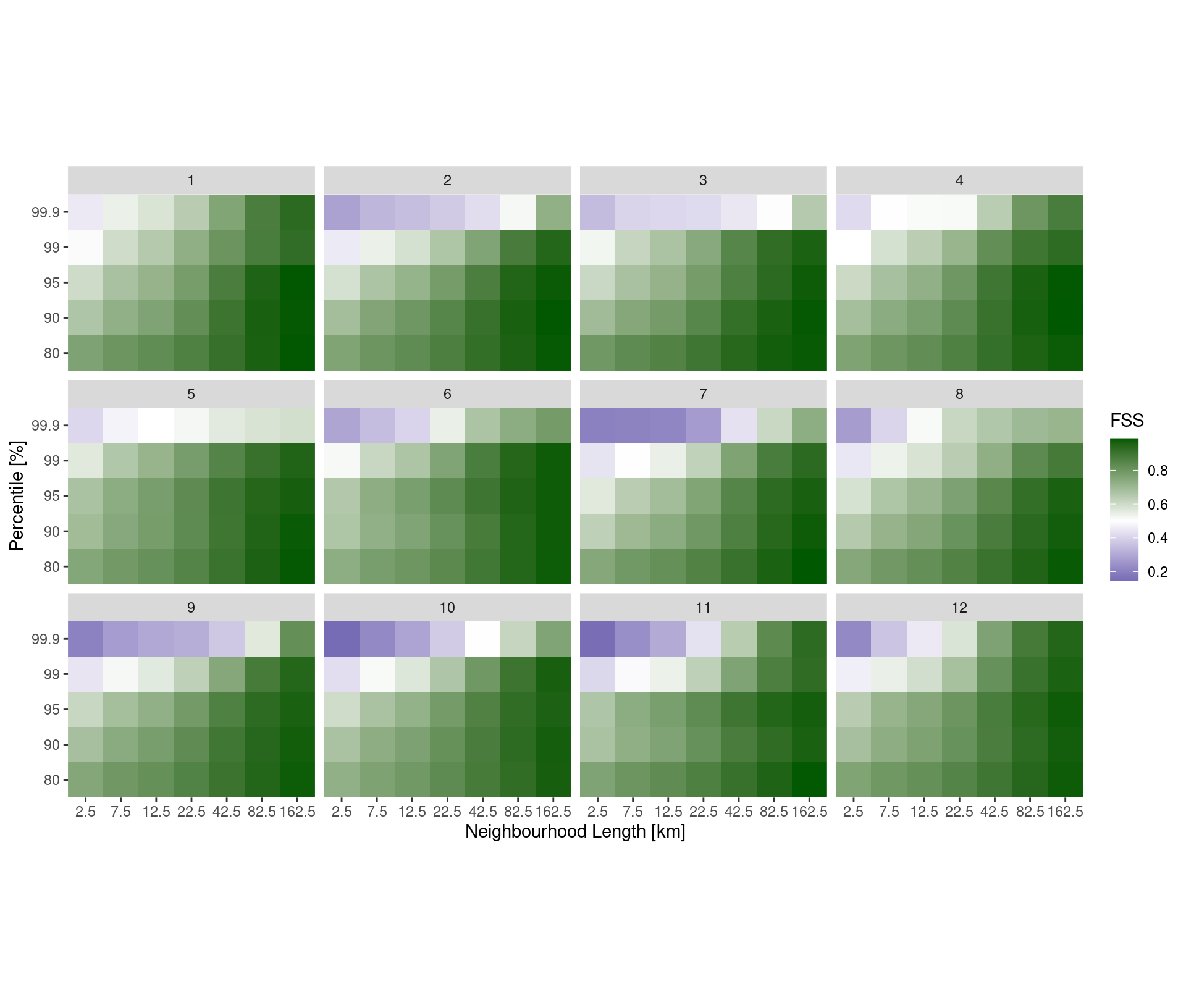

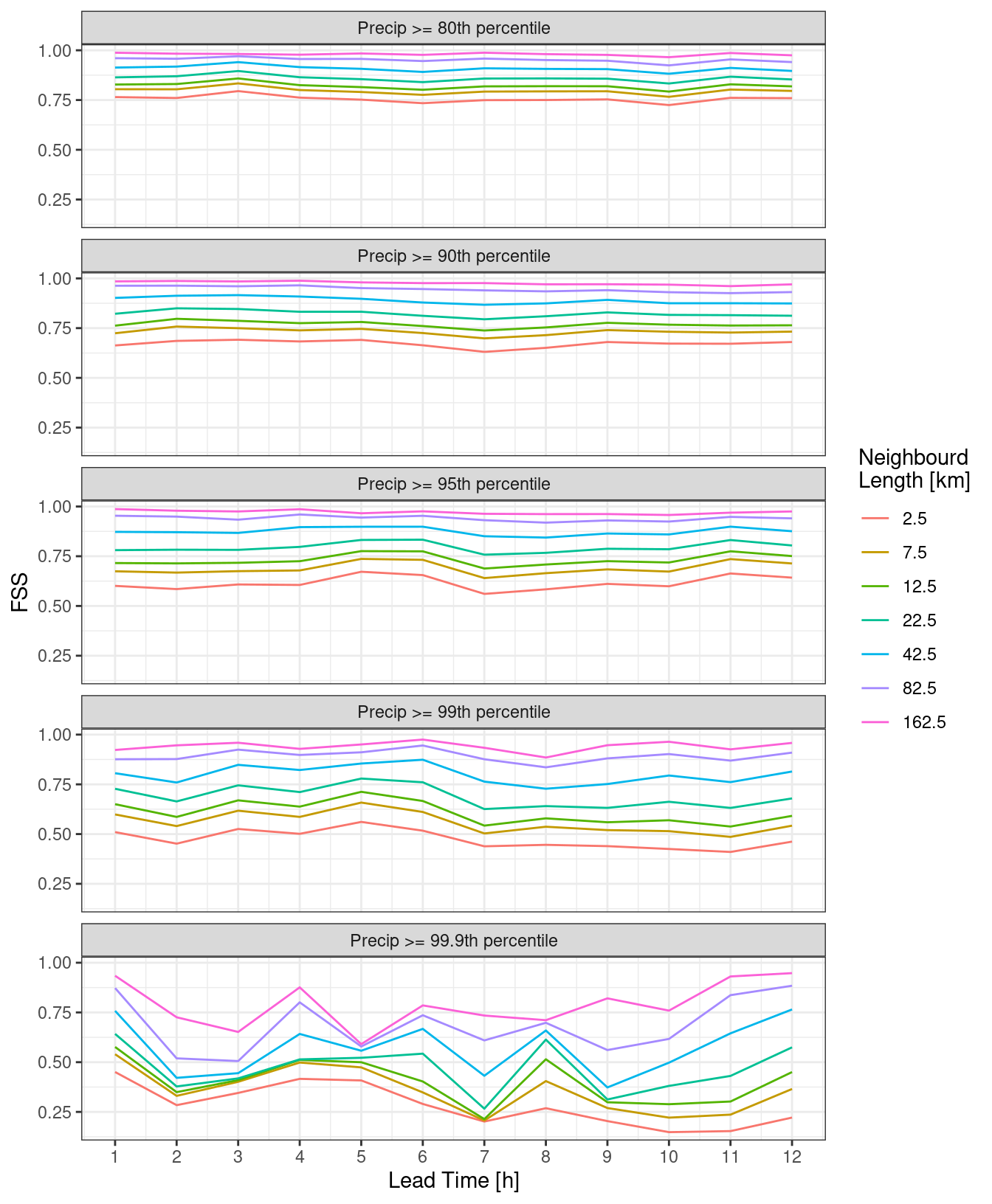

Quantiles for Thresholds

As well as computing the ensemble fractions skill score for absolute thresholds, it is also possible to use quantiles to set the threshold. This is done for each analysis field and it is simply a case of providing the thresholds argument with values between 0 and 1 preceded by a “q”.

thresh <- paste0("q", c(0.8, 0.9, 0.95, 0.99, 0.999))

rad <- c(0, seq_double(1, 6))

pcp_fss <- ens_fss(fcst, anl, thresh ,rad)

pcp_efss <- ens_efss(fcst, anl, thresh ,rad)

pcp_dfss <- ens_dfss(fcst, thresh ,rad)And then we can make similar plots using quantile instead of threshold.

ggplot(

pcp_fss,

aes(

fct_inseq(as.character(nbhd_length / 1000)),

fct_inseq(as.character(quantile * 100)),

fill = fss

)

) +

geom_raster() +

facet_wrap(~lead_time) +

scale_fill_gradient2(

midpoint = 0.5,

low = scales::muted("blue"),

high = scales::muted("green")

) +

labs(

x = "Neighbourhood Length [km]",

y = "Percentile [%]",

fill = "FSS"

) +

coord_equal(expand = FALSE)

ggplot(

pcp_fss,

aes(

lead_time,

fss,

colour = fct_inseq(as.character(nbhd_length / 1000))

)

) +

geom_line() +

facet_wrap(

~fct_reorder(paste0("Precip >= ", quantile * 100, "th percentile"), quantile),

ncol = 1

) +

labs(

x = "Lead Time [h]",

y = "FSS",

colour = "Neighbourd\nLength [km]"

) +

scale_x_continuous(breaks = seq(0, 12)) +

theme_bw()

ggplot(

pcp_fss,

aes(

lead_time,

fss,

colour = fct_reorder(paste0(quantile * 100, "th"), quantile)

)

) +

geom_line() +

facet_wrap(

~fct_reorder(

paste0("Neighbouhood Length: ", nbhd_length / 1000, "km"),

nbhd_length

),

ncol = 1

) +

labs(

x = "Lead Time [h]",

y = "FSS",

colour = "Percentile"

) +

scale_x_continuous(breaks = seq(0, 12)) +

theme_bw()

For the eFSS and dFSS, the important piece of information is for what neighbourhood length the FSS becomes skilful. A rule of thumb is that a “skilful” FSS is a value >= 0.5. We can plot the FSS = 0.5 contour for both eFSS and dFSS. In order to do this, we need to bind the two data frames and make sure each has a column saying what it is.

pcp_fss_ed <- bind_rows(

mutate(pcp_dfss, score = "dFSS"),

mutate(pcp_efss, score = "eFSS")

)

ggplot(

pcp_fss_ed,

aes(lead_time, nbhd_length / 1000, z = fss, colour = score)

) +

geom_contour(breaks = 0.5) +

facet_wrap(

~fct_reorder(paste0("Precip >= ", quantile * 100, "th percentile"), quantile * 100),

ncol = 1

) +

scale_y_log10() +

labs(

x = "Lead Time [h]",

y = "Neighbourhood Length (km)",

colour = NULL

) +

scale_x_continuous(breaks = seq(0, 12)) +

theme_bw()

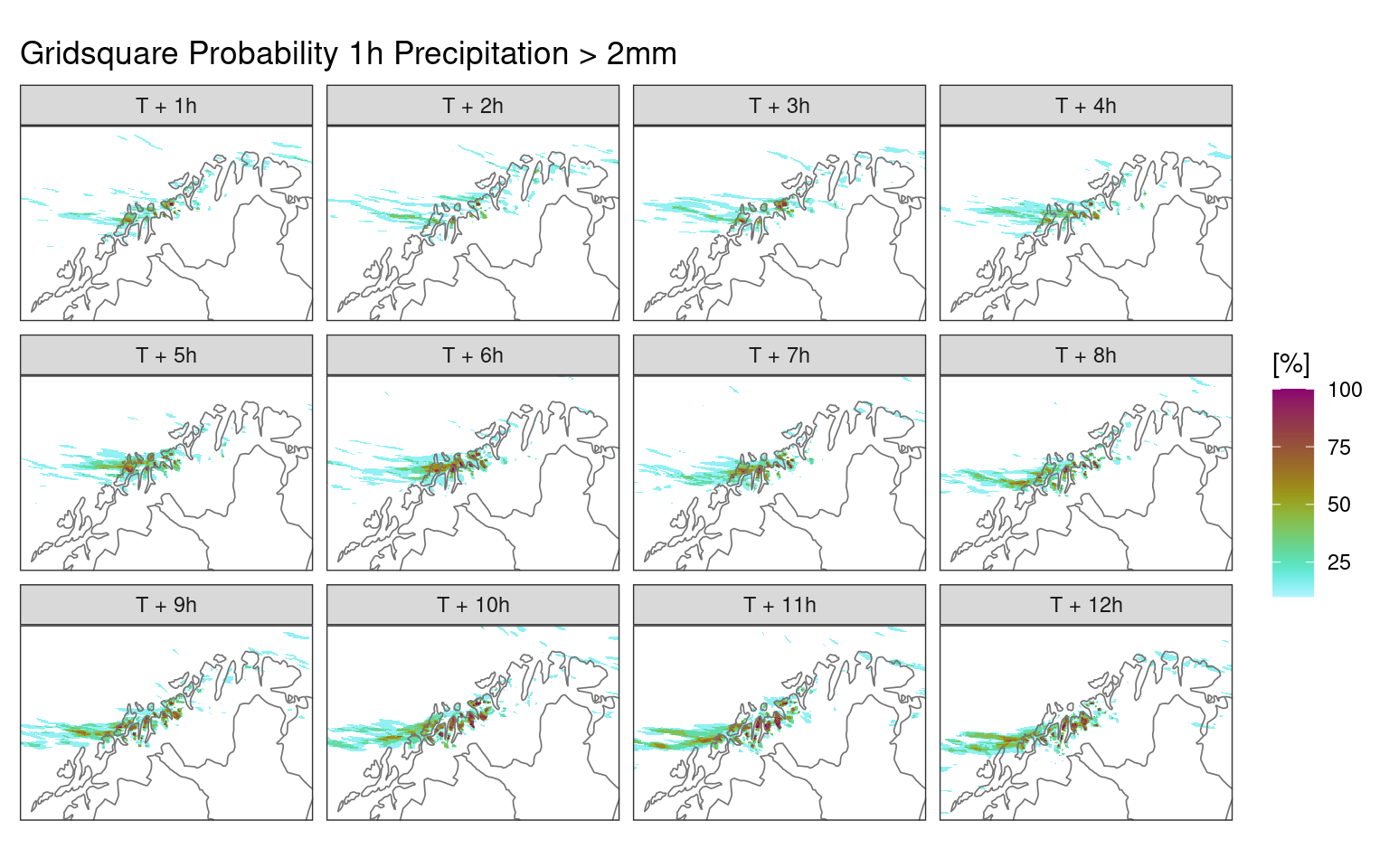

Neighbourhood Probabilities

The ensemble dFSS can be used to inform the spatial scale to use for upscaling precipitation probabilities. Since the ensemble dFSS gives an indication of the spatial uncertainty of a forecast, you could use that same neighbourhood size to upscale forecasts of precipitation probabilities. If, as above, we assume that the neighbourhood size for which the dFSS goes above 0.5 is the spatial uncertainty we can keep increasing the neighbourhood size untile that value is reached. This is best done in a while loop nested in a for loop over each lead time.

prob_upscale <- function(lt, thresh) {

rad <- -1

dfss <- 0

while (dfss < 0.5) {

rad <- rad + 1

dfss <- ens_dfss(filter(fcst, lead_time == lt), thresh, rad)$fss[[1]]

}

message(lt, "h: ", rad)

tibble(

lead_time = lt,

radius = rad

)

}

nbhd_radii <- lapply(seq(1, 12), prob_upscale, thresh = 2) |>

bind()

nbhd_radii# A tibble: 12 × 2

lead_time radius

<int> <dbl>

1 1 11

2 2 13

3 3 10

4 4 9

5 5 7

6 6 5

7 7 5

8 8 5

9 9 5

10 10 5

11 11 4

12 12 6We can now use this information to upscale the probabilities. First let’s make a plot of the grid sqaure probabilities against which to compare. We can use ens_prob() to compute the grid sqaure probabilities,

ggplot() +

geom_georaster(aes(geofield = prob_ge_2 * 100), ens_prob(fcst, 2)) +

geom_path(aes(x, y), fc_map, colour = "grey45", linewidth = 0.3) +

facet_wrap(~fct_reorder(paste0("T + ", lead_time, "h"), lead_time)) +

scale_fill_scico(

"[%]",

palette = "hawaii",

direction = -1,

limits = c(10, 100),

na.value = "transparent"

) +

coord_equal(expand = FALSE) +

labs(title = "Gridsquare Probability 1h Precipitation > 2mm") +

theme_harp_map()

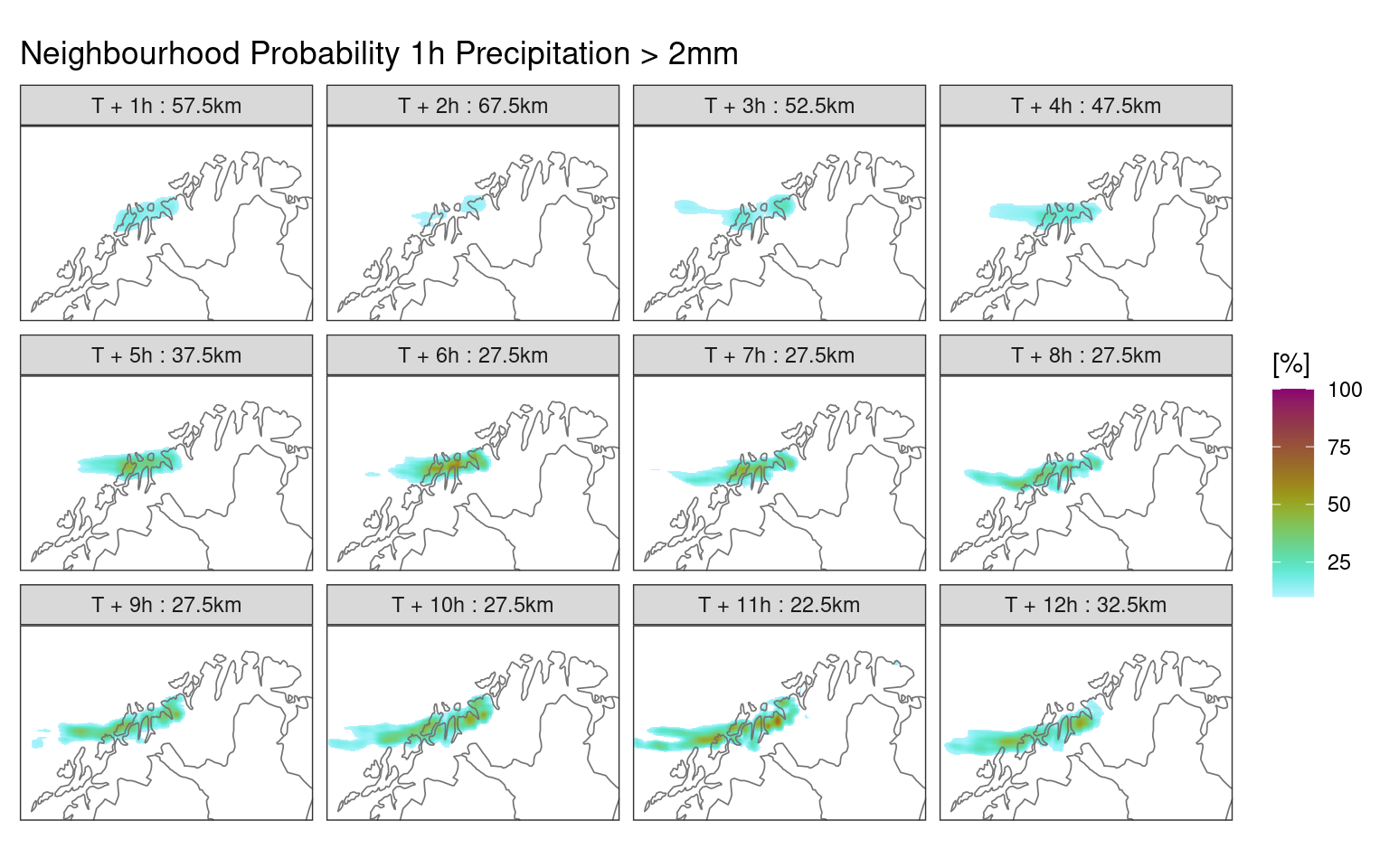

To do the neighbourhood upscaling, we first need to join our new nbhd_radii data frame to the forecast

fcst <- join_to_fcst(fcst, nbhd_radii, force = TRUE)And now we can use nbhd_smooth() to do the upscaling for each member column and calculate the mean with ens_stats() to get the upscaled probabilities.

nbhd_prob <- mutate(

fcst,

across(

contains("_mbr"),

~nbhd_smooth(.x, radius, threshold = 2)

)

) |>

ens_stats(sd = FALSE)nbhd_smooth()

This function smooths geofields using a moving kernel. When it is given a threshold, it first computes the binary probabilities of threshold exceedence before smoothing.

The neighbourhood probabilities are now in the ens_mean column.

ggplot() +

geom_georaster(aes(geofield = ens_mean * 100), nbhd_prob) +

geom_path(aes(x, y), fc_map, colour = "grey45", linewidth = 0.3) +

facet_wrap(

~fct_reorder(

paste0("T + ", lead_time, "h : ", (radius * 2 + 1) * 2.5, "km"),

lead_time

)

) +

scale_fill_scico(

"[%]",

palette = "hawaii",

direction = -1,

limits = c(10, 100),

na.value = "transparent"

) +

coord_equal(expand = FALSE) +

labs(title = "Neighbourhood Probability 1h Precipitation > 2mm") +

theme_harp_map()

In this tutorial, as well as going through the workflow to compute the ensemble fractions skill score, we have seen some methods for plotting data in harp that do not use the built in specialised functions. In the next tutorial we will look more closely at plotting harp data with ggplot().